The Difference is in the Data

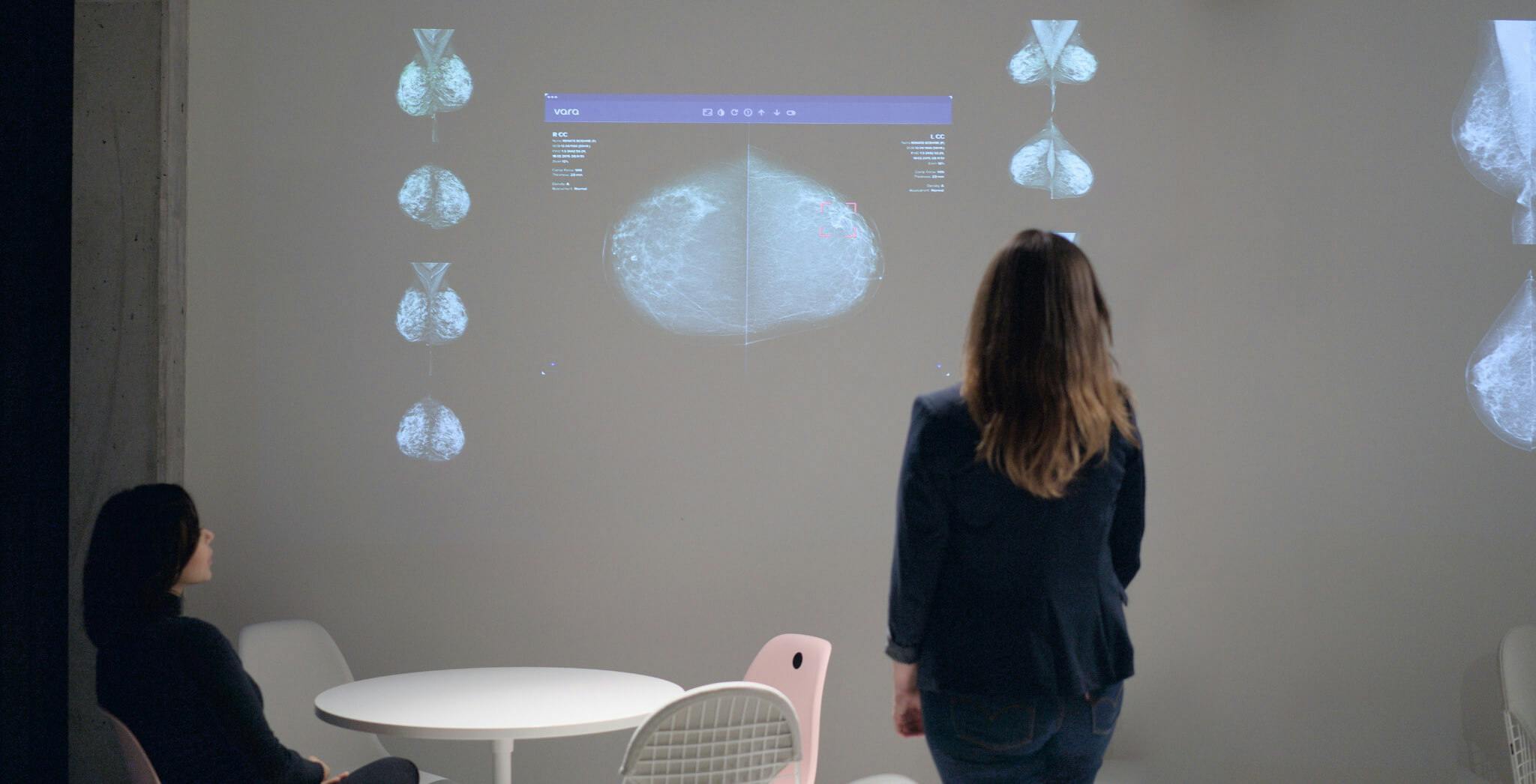

We're continually working alongside our partners to monitor AI predictions, review radiologist-AI interactions, and find the Decision Referral configurations that are right for you.

This ongoing commitment to accuracy, efficiency, and excellence ensures that we’re always optimizing the strengths of AI and radiologists for meaningfully better outcomes everywhere.

We can tailor the algorithm to the specific needs of the user. For example, should the focus be on improving sensitivity or specificity?

Thus, we are able to combine the strengths of humans and AI. Learn more about how this influences the metrics.
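To make the sensitivity-versus-specificity trade-off concrete, here is a minimal sketch of how a score threshold could be tuned toward a target sensitivity. It is an illustration only, not our production configuration: the function names, the example scores, and the rule "flag a study when its score is at or above the threshold" are all assumptions.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Return (sensitivity, specificity) when scores >= threshold are flagged.

    labels: 1 = confirmed malignant, 0 = confirmed normal (hypothetical encoding).
    Assumes at least one positive and one negative case are present.
    """
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if y == 1 and s >= threshold)
    fn = sum(1 for s, y in pairs if y == 1 and s < threshold)
    tn = sum(1 for s, y in pairs if y == 0 and s < threshold)
    fp = sum(1 for s, y in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def threshold_for_sensitivity(scores, labels, target):
    """Return the highest observed score threshold that reaches the target sensitivity."""
    # Sensitivity can only grow as the threshold is lowered, so the first
    # candidate (from high to low) that meets the target is the highest one.
    for candidate in sorted(set(scores), reverse=True):
        sensitivity, _ = sensitivity_specificity(scores, labels, candidate)
        if sensitivity >= target:
            return candidate
    raise ValueError("target sensitivity cannot be reached")


# Made-up example data, for illustration only.
example_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
example_labels = [0, 0, 0, 1, 0, 1, 1, 1]

threshold = threshold_for_sensitivity(example_scores, example_labels, target=1.0)
print(threshold, sensitivity_specificity(example_scores, example_labels, threshold))
```

Lowering the threshold catches more cancers at the cost of more false alarms. Raising it does the opposite. Which direction is right depends on the needs of the site.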

We constantly observe the interaction between radiologists and our AI.

Once per quarter, we sit down with each radiologist and review their interactions. We check how often they overruled the AI’s recommendation, how often the safety net helped them, and how often they discarded the safety net. We then check all of this against the final clinical findings from the biopsies.

We also review cases of disagreement together and use our findings to learn and improve.
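As a rough illustration of what such a review could tally, the sketch below summarizes a list of interaction records. The record fields and the definition of "helped" (the radiologist accepted the safety net and the biopsy confirmed a malignancy) are assumptions made for this example, not a description of our internal tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Interaction:
    """One radiologist-AI interaction (hypothetical record layout)."""
    overruled_ai: bool                 # radiologist decided against the AI's recommendation
    safety_net_shown: bool             # the safety net was triggered for this study
    safety_net_accepted: bool          # the radiologist followed the safety net
    biopsy_malignant: Optional[bool]   # None when no biopsy result is available


def review_summary(interactions):
    """Count the quantities discussed in a quarterly review."""
    total = len(interactions)
    shown = [i for i in interactions if i.safety_net_shown]
    overruled = sum(1 for i in interactions if i.overruled_ai)
    # Assumption: "helped" means accepted AND confirmed malignant by biopsy.
    helped = sum(1 for i in shown if i.safety_net_accepted and i.biopsy_malignant is True)
    discarded = sum(1 for i in shown if not i.safety_net_accepted)
    return {
        "total": total,
        "overruled": overruled,
        "overrule_rate": overruled / total if total else 0.0,
        "safety_net_shown": len(shown),
        "safety_net_helped": helped,
        "safety_net_discarded": discarded,
    }
```

Comparing the discarded safety-net cases against later biopsy results is what shows whether a discard was justified.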

We constantly monitor our AI's predictions and check that they're within expected ranges.

For example, we observe and compare the distribution of prediction scores for biopsy-proven malignancies (violet) and studies that did not get reviewed during the consensus conference (blue).

By doing this, we can ensure that the performance we've measured retrospectively is delivered in actual clinical use.
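One common way to compare two score distributions is the two-sample Kolmogorov-Smirnov statistic: the largest gap between their empirical cumulative distributions. We are not claiming this is the exact check used in our monitoring. The sketch below only illustrates the idea, and the function names and the alert limit of 0.2 are hypothetical.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the empirical CDFs of two non-empty samples."""
    a_sorted, b_sorted = sorted(sample_a), sorted(sample_b)
    largest_gap = 0.0
    # The maximum gap between two step functions occurs at an observed value.
    for x in a_sorted + b_sorted:
        cdf_a = bisect_right(a_sorted, x) / len(a_sorted)
        cdf_b = bisect_right(b_sorted, x) / len(b_sorted)
        largest_gap = max(largest_gap, abs(cdf_a - cdf_b))
    return largest_gap


def has_drifted(reference_scores, current_scores, max_distance=0.2):
    """Flag a period whose scores differ from the reference by more than max_distance.

    max_distance is an illustrative limit, not a validated clinical value.
    """
    return ks_statistic(reference_scores, current_scores) > max_distance
```

A check like this can be run per group, for example on the scores of biopsy-proven malignancies, comparing the current period with the retrospective evaluation data.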

We also react quickly to unexpected events when required, and we're linked to the cancer registry to obtain final cancer diagnoses via biopsy results.